Inverting images in dark mode, many shenanigans, and it's always StackOverflow

The Problem

Something that has been troubling me for a while is taking a PNG with a transparent background and black strokes and displaying it on a dark background. I've finally found my holy grail of a solution! It's machine learning!

I found this while doing my regular scour of the internet when I'm confused about something like the CSS filter property, and it's beautiful ✨.

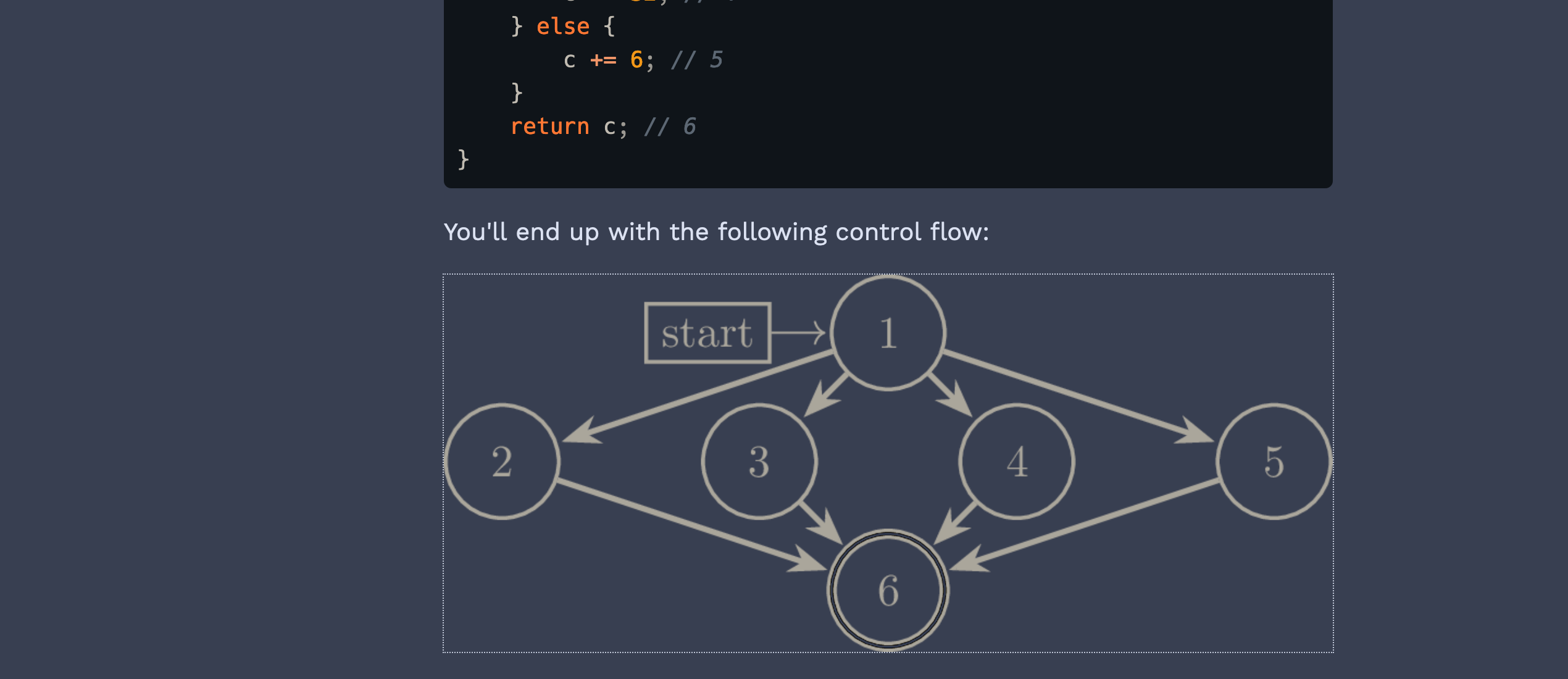

I needed to color the headphone boi, and I had already messed around with filter: invert() and such while trying to help Addison Crump get his LaTeX graph diagram to display in dark mode (Mixed Flow Graph on the Wayback Machine).

We had tried various options, such as a simple

    .diagram {
      filter: invert();
    }

which turns the diagram from black to white. That's close, but it's a little harsh when the rest of the elements have a gentler tone. We experimented with filter: sepia() to add some color, but Addison settled on using

    .diagram {
      filter: invert();
      mix-blend-mode: difference;
    }

mix-blend-mode tells CSS how to combine the element's colors with its background colors, as far as I understand it, so setting mix-blend-mode: difference takes the difference between the white of the diagram and the steely blue of the background, resulting in a tone that looks like old book paper to me.
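
As a rough sketch of what those two declarations do to a single pixel, the snippet below inverts a black stroke and then takes the per-channel difference against a backdrop color. The function names are my own, the backdrop value is a made-up stand-in for the steely blue, and it assumes fully opaque pixels, so treat it as an illustration rather than what the browser literally runs.

```javascript
// Colors are [r, g, b] arrays with channels in the 0-255 range.

// invert(amount): linear mix between the channel and its complement.
function invert(rgb, amount = 1) {
  return rgb.map((v) => Math.round(v * (1 - amount) + (255 - v) * amount));
}

// difference blend: absolute per-channel distance between source and backdrop.
function blendDifference(source, backdrop) {
  return source.map((v, i) => Math.abs(v - backdrop[i]));
}

const stroke = [0, 0, 0];       // black stroke from the PNG
const backdrop = [40, 60, 90];  // hypothetical steely blue page background

const inverted = invert(stroke);                     // [255, 255, 255]
const blended = blendDifference(inverted, backdrop); // [215, 195, 165]
console.log(inverted, blended);
```

With this made-up backdrop the result is a warm beige, which matches the old-book-paper look.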

Tada! You can see the original solution at Mixed-Flow Graphs (Mixed-Flow Graphs on the Wayback Machine).

It does a great, simple job of making the diagram fit into the surrounding vibe, but it's not quite the same as being able to set the diagram to the same color as the text.

But what if we could?

From https://xkcd.com/1838/ (xkcd 1838 on the Wayback Machine). Thanks for all the laughs.

The Solution

What I found on StackOverflow was a ludicrous idea: implement the browser's CSS filter functions as JavaScript functions, then set up a small loss function and gradient descent to search the space of filters for the combination that gets closest to your target color when applied to a black image with a transparent background.

CSS filter is built from a bunch of primitives, like sepia(), invert(), and hue-rotate(), but on the backend they essentially implement an <feColorMatrix> or another SVG filter type.
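
To make the color-matrix idea concrete, here is a small sketch of sepia() written as a 3x3 matrix applied to an RGB triple. The coefficients are the ones I understand the filter specification to use for sepia; the function names and the 0-255 array representation are my own assumptions.

```javascript
// Build the 3x3 matrix for sepia(amount), with amount between 0 and 1.
function sepiaMatrix(amount = 1) {
  const s = 1 - amount; // s = 1 means "no effect" (identity matrix)
  return [
    [0.393 + 0.607 * s, 0.769 - 0.769 * s, 0.189 - 0.189 * s],
    [0.349 - 0.349 * s, 0.686 + 0.314 * s, 0.168 - 0.168 * s],
    [0.272 - 0.272 * s, 0.534 - 0.534 * s, 0.131 + 0.869 * s],
  ];
}

// Multiply an [r, g, b] color by a 3x3 matrix, clamping each channel to 0-255.
function applyMatrix(rgb, m) {
  const clamp = (v) => Math.min(255, Math.max(0, v));
  return m.map((row) =>
    clamp(row[0] * rgb[0] + row[1] * rgb[1] + row[2] * rgb[2])
  );
}

console.log(applyMatrix([255, 255, 255], sepiaMatrix(1)));
console.log(applyMatrix([0, 0, 0], sepiaMatrix(1))); // black stays black
```

One thing this makes visible: a pure matrix multiply can never move black away from black, which is presumably why a filter chain for black strokes needs something like invert() before the color-shifting filters.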

So in the solution (Accepted StackOverflow Solution on the Wayback Machine), via a CodePen reimplementation (CodePen Reimplementation of the solution on the Wayback Machine), they implemented invert(), sepia(), saturate(), hue-rotate(), brightness(), and contrast() as JavaScript functions that implement the matrix transform as a series of multiplications.

Those functions are then driven by an SPSA (Simultaneous Perturbation Stochastic Approximation) optimizer, which works on an array of percentages, one for each of the filter primitives in filter: invert([0]) sepia([1]) saturate([2]) hue-rotate([3]) brightness([4]) contrast([5]).
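
As a sketch of how such an array could be turned back into CSS, the helper below formats six values in the same order that the post's loss function applies them (invert first, with the hue value scaled by 3.6 to get degrees). The helper name and the sample values are made up for illustration.

```javascript
// Turn an array of six filter values into a CSS declaration.
// Index 3 is a percentage of a full turn, so it is scaled to degrees.
function toFilterString(filters) {
  const pct = (i) => `${Math.round(filters[i])}%`;
  const deg = `${Math.round(filters[3] * 3.6)}deg`;
  return (
    `filter: invert(${pct(0)}) sepia(${pct(1)}) saturate(${pct(2)}) ` +
    `hue-rotate(${deg}) brightness(${pct(4)}) contrast(${pct(5)});`
  );
}

// Hypothetical values, not a real solver result.
console.log(toFilterString([50, 20, 3750, 50, 100, 100]));
```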

Then, a loss function is set up for the SPSA so that it can judge the performance of a particular set of filter percentages. They used the RGB difference, since the end goal is a filter that produces the same RGB color, plus the HSL (Hue, Saturation, Lightness color model) difference, since hue-rotate() vaguely correlates with the hue in HSL, and saturate() correlates with the saturation.

So the loss function is this:

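    // Expects this.target (RGB) and this.targetHSL to hold the target color.
    function loss(filters) {
      // Start from black, the color of the strokes in the source image.
      let color = new Color(0, 0, 0);
      // Apply each filter in order; percentages become 0-1 fractions.
      color.invert(filters[0] / 100);
      color.sepia(filters[1] / 100);
      color.saturate(filters[2] / 100);
      color.hueRotate(filters[3] * 3.6); // percentage of a turn to degrees
      color.brightness(filters[4] / 100);
      color.contrast(filters[5] / 100);
      let colorHSL = color.hsl();
      // Sum of absolute RGB and HSL differences from the target.
      return (
        Math.abs(color.r - this.target.r) +
        Math.abs(color.g - this.target.g) +
        Math.abs(color.b - this.target.b) +
        Math.abs(colorHSL.h - this.targetHSL.h) +
        Math.abs(colorHSL.s - this.targetHSL.s) +
        Math.abs(colorHSL.l - this.targetHSL.l)
      );
    }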
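
The loss above leans on color.hsl(), so here is a minimal sketch of a standard RGB to HSL conversion. It returns hue in degrees and saturation and lightness as percentages, which is my own choice of scaling; the Color class in the linked solution may scale its values differently.

```javascript
// Convert 0-255 RGB channels to HSL (h in degrees, s and l in percent).
function rgbToHsl(r, g, b) {
  r /= 255;
  g /= 255;
  b /= 255;
  const max = Math.max(r, g, b);
  const min = Math.min(r, g, b);
  const l = (max + min) / 2;
  let h = 0;
  let s = 0;

  if (max !== min) {
    const d = max - min;
    s = l > 0.5 ? d / (2 - max - min) : d / (max + min);
    // Pick the hue sector based on which channel is largest.
    if (max === r) {
      h = 60 * ((g - b) / d + (g < b ? 6 : 0));
    } else if (max === g) {
      h = 60 * ((b - r) / d + 2);
    } else {
      h = 60 * ((r - g) / d + 4);
    }
  }
  return { h, s: s * 100, l: l * 100 };
}

console.log(rgbToHsl(255, 0, 0)); // { h: 0, s: 100, l: 50 }
console.log(rgbToHsl(0, 0, 255)); // { h: 240, s: 100, l: 50 }
```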

Then there is a step where the SPSA is used to solve a "wide" case, to get in the ballpark of the correct color with at most 25 attempts, but more likely fewer. After that, the SPSA is run on a "narrow" case to refine the color and attempt to perfect the filters.

The algorithm stops when it has completed the predefined number of iterations. I ended up naively raising the number of iterations in the "narrow" case from 500 to 5000, but according to the solution, I should be using the debug patch of the solution in order to tune the A and a parameters.
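
For a feel of where the iteration count and the A and a parameters show up, here is a minimal, generic SPSA sketch. It is not the code from the linked solution: the option names, default values, and decay exponents are my own assumptions (the exponents are the commonly suggested ones for SPSA), and the toy loss just measures distance to a fixed point.

```javascript
// Minimal SPSA sketch. All names and defaults here are illustrative.
function spsa(loss, initial, options = {}) {
  const {
    iterations = 1000, // fixed budget: the loop stops when it runs out
    a = 0.1,           // step-size gain
    A = 10,            // stability constant that damps the early steps
    c = 0.1,           // perturbation size
    alpha = 0.602,     // decay exponent for the step size
    gamma = 0.101,     // decay exponent for the perturbation size
    rng = Math.random,
  } = options;

  const values = initial.slice();
  let best = values.slice();
  let bestLoss = loss(values);

  for (let k = 0; k < iterations; k++) {
    const ak = a / Math.pow(A + k + 1, alpha);
    const ck = c / Math.pow(k + 1, gamma);

    // Perturb every parameter at once by +ck or -ck.
    const delta = values.map(() => (rng() < 0.5 ? -1 : 1));
    const plus = values.map((v, i) => v + ck * delta[i]);
    const minus = values.map((v, i) => v - ck * delta[i]);
    const diff = loss(plus) - loss(minus);

    // Two loss evaluations yield a gradient estimate for all parameters.
    for (let i = 0; i < values.length; i++) {
      values[i] -= ak * (diff / (2 * ck * delta[i]));
    }

    // Remember the best point seen so far.
    const current = loss(values);
    if (current < bestLoss) {
      best = values.slice();
      bestLoss = current;
    }
  }
  return { values: best, loss: bestLoss };
}

// Toy usage: search for the point closest to (3, -1), starting from (0, 0).
const toyLoss = ([x, y]) => (x - 3) ** 2 + (y + 1) ** 2;
const result = spsa(toyLoss, [0, 0]);
console.log(result);
```

Raising iterations only buys more steps of a shrinking size, so past a point, tuning a and A likely matters more than the budget.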

N.B. I think I'll update this post in the future with a better understanding of the solution, and hopefully my own iteration of perfecting the design?

N.B. Apparently I'm dumb and you can just do this using <picture> and media queries, since <picture> lets you embed media queries in it, so you just generate two different images that are served...
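
A minimal sketch of that markup, generated from JavaScript to stay in one language: the helper name and the file names are hypothetical, and it assumes the dark variant is selected with the prefers-color-scheme media feature. It does no HTML escaping, so only pass it trusted strings.

```javascript
// Build <picture> markup that serves a different image in dark mode.
function pictureMarkup(lightSrc, darkSrc, alt) {
  return [
    "<picture>",
    `  <source srcset="${darkSrc}" media="(prefers-color-scheme: dark)">`,
    `  <img src="${lightSrc}" alt="${alt}">`,
    "</picture>",
  ].join("\n");
}

// Hypothetical file names for the two pre-generated images.
const markup = pictureMarkup("diagram-light.png", "diagram-dark.png", "Diagram");
console.log(markup);
```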

References:

- 4 CSS Filters For Adjusting Color : https://vanseodesign.com/css/4-css-filters-for-adjusting-color

- CSS filter generator to convert from black to target hex color : https://codepen.io/sosuke/pen/Pjoqqp

- How to Transform Black into any Given Color using only CSS Filters : https://stackoverflow.com/questions/42966641/how-to-transform-black-into-any-given-color-using-only-css-filters (archived: https://web.archive.org/web/20210220193004/https://stackoverflow.com/questions/42966641/how-to-transform-black-into-any-given-color-using-only-css-filters/43960991)

- Why doesn't hue rotation by +180deg and -180deg yield the original color? : https://stackoverflow.com/questions/19187905/why-doesnt-hue-rotation-by-180deg-and-180deg-yield-the-original-color/19325417#19325417 -- This post describes why I wasn't getting anywhere with a simple solution. It shows a simple setup that nearly worked for me out of the box, in the sense that I got a "yellow" out of it.